Researchers have unveiled novel architectures that point toward significant advances beyond current large language model designs. These technical innovations address existing limitations while enabling capabilities that contemporary systems cannot achieve.

The developments suggest the field approaches another major transition comparable to previous architectural breakthroughs. Understanding these emerging approaches provides insight into how language models may evolve and what new applications might become possible.

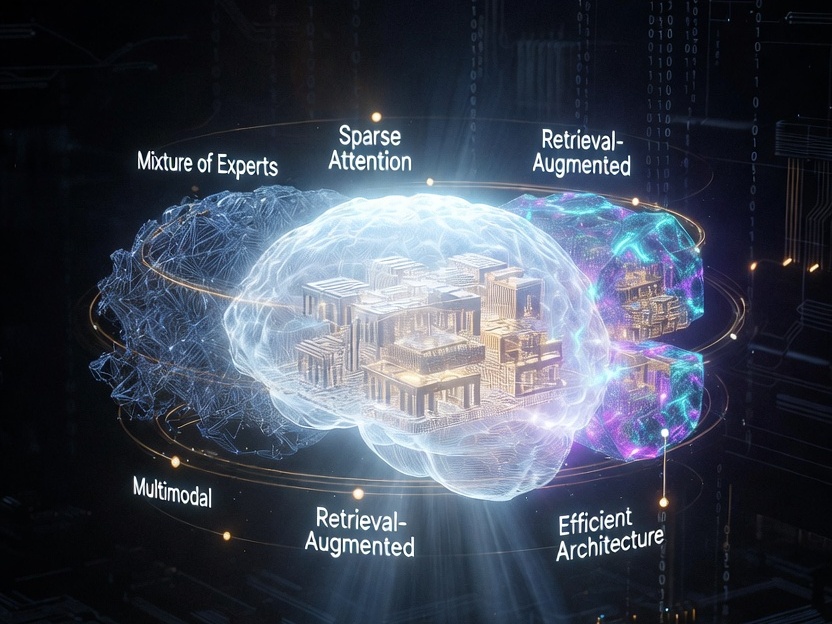

Architectural Innovations

Mixture-of-experts architectures gained prominence by activating only the relevant portions of a model for a given input rather than engaging the entire network. According to research from MIT, this approach dramatically improves efficiency while matching or exceeding the performance of traditional dense models.
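As a rough illustration of selective activation, the sketch below routes an input to the two highest-scoring experts and mixes only their outputs. It is a toy under stated assumptions: the names `top_k_route` and `moe_forward` are hypothetical, the gate scores are passed in directly (a real router would compute them from the input), and the "experts" are simple scaling functions rather than neural sub-networks.

```python
import math

def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(gate_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    order = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    chosen = order[:k]
    # Renormalize the gate scores over the selected experts only.
    weights = softmax([gate_logits[i] for i in chosen])
    return list(zip(chosen, weights))

def moe_forward(x, experts, gate_logits, k=2):
    """Weighted sum of the outputs of the k selected experts; the rest never run."""
    out = [0.0] * len(x)
    for idx, weight in top_k_route(gate_logits, k):
        y = experts[idx](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out

# Four toy experts (assumption): each just scales its input by a fixed factor.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]

# Experts 1 and 3 have the highest gate scores, so only they are evaluated.
print(moe_forward([1.0, 2.0], experts, gate_logits=[0.1, 2.0, -1.0, 2.0], k=2))
```

With `k=2` and four experts, half of the expert computation is skipped for this input, which is the source of the efficiency gain described above.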

Sparse attention mechanisms reduce computational requirements by focusing processing on the most relevant portions of the input. Instead of every token attending to all others, selective attention patterns identify important relationships while ignoring less relevant connections. This makes it possible to handle longer contexts with manageable resource consumption.
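One simple sparse pattern is a sliding window, where each token attends only to its near neighbours. The sketch below is illustrative: the function names are hypothetical, and a local window is just one of several sparsity patterns, not necessarily the one any particular system uses. It builds the attention mask and compares the number of attended pairs against dense attention.

```python
def sliding_window_mask(seq_len, window):
    """mask[i][j] is True when token i may attend to token j (|i - j| <= window)."""
    return [[abs(i - j) <= window for j in range(seq_len)] for i in range(seq_len)]

def attended_pairs(mask):
    # Count the (query, key) pairs that are actually computed.
    return sum(sum(row) for row in mask)

seq_len = 8
mask = sliding_window_mask(seq_len, window=1)
print(attended_pairs(mask), "of", seq_len * seq_len)  # 22 of 64
```

Dense attention grows quadratically with sequence length, while the windowed pattern grows roughly linearly (about `seq_len * (2 * window + 1)` pairs), which is why longer contexts become affordable.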

Retrieval-augmented approaches combine parametric knowledge stored in model weights with external information accessed during generation. Systems query databases or document collections, incorporating retrieved content into responses. This architecture separates factual knowledge from reasoning capabilities, enabling updates without full retraining.
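The retrieve-then-generate flow can be sketched in a few lines. Everything here is a simplifying assumption: the scoring is plain word overlap rather than a learned embedding search, the documents are made-up placeholders, and `build_prompt` only assembles the text that a language model would then receive.

```python
def tokenize(text):
    # Crude lowercase whitespace tokenizer; real systems use learned embeddings.
    return set(text.lower().split())

def retrieve(query, documents, top_n=1):
    """Rank documents by how many words they share with the query."""
    query_words = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(query_words & tokenize(d)), reverse=True)
    return ranked[:top_n]

def build_prompt(query, documents, top_n=1):
    """Place the retrieved passages ahead of the question for the model to use."""
    context = "\n".join(retrieve(query, documents, top_n))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"

# Hypothetical external knowledge base; editing it needs no model retraining.
docs = [
    "The warehouse in Lyon opened in 2019.",
    "Quarterly revenue figures are stored in the finance wiki.",
]
print(build_prompt("When did the Lyon warehouse open?", docs))
```

Because the facts live in `docs` rather than in model weights, updating the system's knowledge amounts to editing the document collection.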

Multimodal Integration

Next-generation architectures natively process multiple input types rather than treating different modalities as separate domains. Unified models handle text, images, audio, and video through shared representations enabling cross-modal understanding.

These systems can answer questions about images, generate pictures from descriptions, or create videos matching textual scripts without separate specialized components. According to Google Research publications, integrated architectures outperform pipeline approaches combining independent models.

Training procedures evolved to handle diverse data types simultaneously. Rather than pre-training on text and then adapting to other modalities, unified training develops general representations applicable across domains from the start.

Efficiency and Scalability

Resource requirements constrain current model development. Training the largest systems costs millions of dollars and consumes enormous amounts of energy. New architectures prioritize efficiency alongside capability improvements.

Compression techniques reduce model sizes while preserving performance. Knowledge distillation transfers capabilities from large teacher models to smaller student versions deployable on resource-constrained devices. Quantization reduces numerical precision requirements, shrinking memory footprints.
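Both techniques can be shown at toy scale. The sketch below assumes symmetric 8-bit quantization with a single scale per weight list, and a distillation loss defined as the KL divergence between temperature-softened teacher and student distributions. These are common textbook formulations chosen for illustration, the function names are hypothetical, and practical recipes usually add further terms and scaling factors.

```python
import math

def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # avoid a zero scale
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    # Recover approximate float weights from the stored integers.
    return [q * scale for q in quantized]

def softmax(logits, temperature=1.0):
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened output distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

weights = [0.5, -1.27, 0.02, 1.0]
q, scale = quantize_int8(weights)
print(q, dequantize(q, scale))
print(distillation_loss([1.0, 2.0, 3.0], [3.0, 2.0, 1.0]))
```

Each quantized weight fits in one byte instead of four, at the cost of a rounding error of at most half the scale per weight. The distillation loss falls to zero when the student reproduces the teacher's output distribution.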

These efficiency gains democratize access. Smaller organizations and individual researchers can work with capable models without massive computational budgets. Edge deployment becomes feasible, enabling on-device processing without cloud connectivity.

Reasoning and Planning

Current models excel at pattern matching and text generation but struggle with systematic reasoning and multi-step planning. Emerging architectures incorporate explicit reasoning mechanisms rather than relying solely on learned patterns.

Chain-of-thought prompting demonstrated that encouraging step-by-step reasoning improves performance. New designs build these processes into model structures rather than depending on prompt engineering. Dedicated reasoning modules handle logical inference, mathematical calculations, and sequential planning.

Tool use capabilities enable models to invoke external programs for specialized tasks. Rather than attempting all operations internally, systems can call calculators, search engines, or domain-specific software, combining their language understanding with programmatic precision.
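A minimal dispatcher illustrates the idea. The call format (`{"tool": ..., "input": ...}`), the `TOOLS` registry, and the function names are assumptions made for this sketch, not a description of any particular system. The calculator evaluates only basic arithmetic by walking Python's `ast` tree, so arbitrary code is never executed.

```python
import ast
import operator

# Arithmetic operators the toy calculator is willing to evaluate.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

def calculator(expression):
    """Evaluate +, -, *, / over numeric literals; reject everything else."""
    def evaluate(node):
        if (isinstance(node, ast.Constant)
                and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](evaluate(node.left), evaluate(node.right))
        raise ValueError("unsupported expression")
    return evaluate(ast.parse(expression, mode="eval").body)

# Hypothetical tool registry: tool name -> callable.
TOOLS = {"calculator": calculator}

def run_tool_call(call):
    """Dispatch a structured tool request emitted by the model."""
    tool = TOOLS.get(call["tool"])
    if tool is None:
        raise KeyError(f"unknown tool: {call['tool']}")
    return tool(call["input"])

print(run_tool_call({"tool": "calculator", "input": "2 * (3 + 4)"}))  # 14
```

In a full system the model would emit the structured request, the host program would run it as above, and the result would be fed back into the model's context before it continues generating.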

Training and Implementation Evolution

Self-supervised learning advances reduce dependence on labeled data. Models learn from raw text, discovering patterns without human annotation, enabling training on massive datasets impractical to label manually.

Reinforcement learning from human feedback refines behavior by aligning outputs with user preferences. Rather than optimizing purely for prediction accuracy, systems learn to generate responses that humans find helpful and honest.
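One common ingredient of this process is a reward model trained on pairs of responses that human raters have ranked. The sketch below shows a widely used pairwise loss, the negative log-sigmoid of the reward gap. It is offered as an illustration only: the article does not specify a training objective, and `preference_loss` is a hypothetical name.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """-log(sigmoid(r_chosen - r_rejected)), computed in a numerically stable way."""
    diff = reward_chosen - reward_rejected
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    # For negative gaps, rewrite the formula to avoid overflow in exp().
    return -diff + math.log1p(math.exp(diff))

# The loss is small when the human-preferred response scores higher...
print(preference_loss(2.0, 0.0))
# ...and large when the reward model ranks the pair the wrong way round.
print(preference_loss(0.0, 2.0))
```

Minimizing this loss pushes the reward model to score preferred responses above rejected ones. The language model is then optimized against that learned reward.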

Continual learning approaches enable models to update their knowledge without catastrophic forgetting. Incremental update methods preserve existing capabilities while integrating fresh data.
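Rehearsal, also called replay, is one family of incremental update methods: each training batch mixes fresh examples with a sample of older ones so that earlier behaviour keeps being exercised. The sketch below is an assumption-laden toy. The function name, the 50/50 split, and the integer "examples" are all hypothetical, and the article does not say which method any given system uses.

```python
import random

def mixed_batches(new_examples, replay_buffer, batch_size, replay_fraction=0.5, seed=0):
    """Yield batches that combine fresh examples with replayed older ones."""
    rng = random.Random(seed)
    n_replay = min(int(batch_size * replay_fraction), len(replay_buffer))
    n_new = batch_size - n_replay
    if n_new < 1:
        raise ValueError("each batch needs at least one new example")
    for start in range(0, len(new_examples), n_new):
        fresh = new_examples[start:start + n_new]
        # Sample old examples without replacement within a batch.
        replayed = rng.sample(replay_buffer, n_replay)
        yield fresh + replayed

old_data = list(range(10))        # stand-ins for previously learned examples
new_data = list(range(100, 106))  # stand-ins for fresh examples

for batch in mixed_batches(new_data, old_data, batch_size=4):
    print(batch)
```

Every batch here contains two new and two replayed items, so updates on fresh data are always balanced against reminders of what the model already knew.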

Novel architectures introduce new complexity. Distributed experts require sophisticated routing mechanisms. Retrieval augmentation demands maintaining external knowledge bases. Multimodal processing increases data requirements and training difficulty.

Evaluation becomes harder as capabilities expand. Standard benchmarks may not capture performance on new task types. Developing appropriate assessment methods parallels architectural advancement.

Looking Forward

These architectural innovations will likely coexist rather than one design dominating all applications. Different use cases favor different approaches based on specific requirements for efficiency, capability, and resource constraints.

The field moves beyond the transformer architecture that dominated recent years while building on its foundations. Next-generation systems will combine multiple innovations addressing current limitations and enabling previously impossible applications as language models continue their rapid evolution.