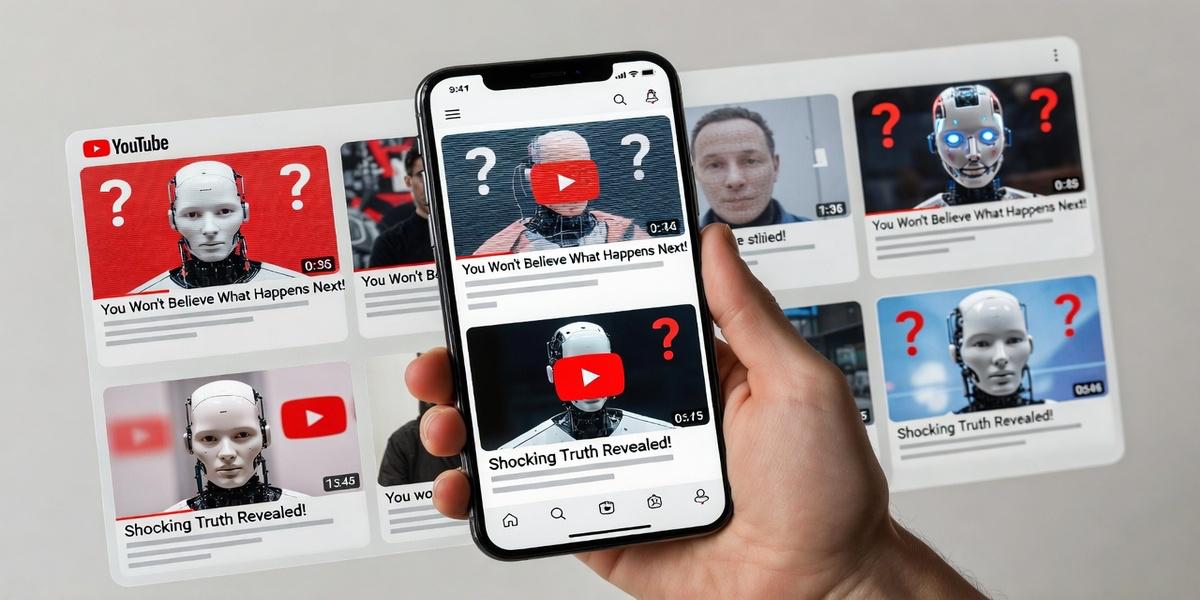

YouTube is serving up a feast of artificial intelligence-generated garbage to its newest users. More than one in five videos shown to new accounts falls into what researchers call “AI slop,” according to a new Guardian investigation.

The problem extends beyond annoyance. These low-quality, mass-produced videos are raking in approximately $117 million per year, creating a financial incentive for creators to flood the platform with synthetic content that ranges from misleading to outright nonsensical. The videos often feature robotic voiceovers, recycled stock footage, and information of dubious accuracy, all optimized to game YouTube’s recommendation algorithm rather than provide genuine value.

The Scale of Synthetic Content

The Guardian’s research team created new YouTube accounts to simulate the experience of first-time users. What they found was alarming: the platform’s algorithm consistently pushed AI-generated content into their feeds, often within the first few recommendations. These videos covered everything from health advice to historical “facts,” much of it unverified or misleading.

Common examples include compilations of celebrity deaths that never happened, miracle cure videos promoting unproven health remedies, and historical documentaries filled with fabricated events. Each video is designed to maximize watch time and ad impressions, not accuracy or viewer satisfaction.

How Creators Game the System

The economics are straightforward. AI tools can write a script, generate a voiceover, and handle basic video editing in minutes. A single creator can produce dozens of videos daily, each targeting popular search terms and trending topics. The algorithm, designed to keep users engaged, often can’t distinguish between authentic content and AI-generated imitations.

This phenomenon mirrors broader concerns about AI-generated content quality across social media platforms. The term “slop” has emerged in tech circles to describe the deluge of low-effort, AI-produced material that prioritizes quantity over quality, flooding platforms with content that meets algorithmic criteria without serving human interests.

Impact on Legitimate Creators

The flood of AI spam has real consequences for human creators who invest time and expertise in their content. Their videos must compete for visibility against an endless stream of synthetic alternatives that cost virtually nothing to produce. Some creators report declining viewership and engagement as the algorithm increasingly favors high-volume AI content over quality productions.

Sarah Chen, a science educator with 200,000 subscribers, describes the challenge: “I spend weeks researching and producing a single video. Meanwhile, AI channels pump out ten videos in the same time, all targeting the same keywords. The algorithm doesn’t care which one is accurate.”

YouTube’s Response

YouTube’s policies technically prohibit misleading content and spam, but enforcement remains inconsistent. The platform has introduced some AI disclosure requirements, but these apply primarily to certain categories like altered media depicting real events. Mass-produced educational or entertainment content often slips through unchecked.

A YouTube spokesperson previously told The Verge that the company uses both automated systems and human reviewers to identify and remove spam. However, the scale of AI-generated uploads appears to overwhelm existing moderation systems.

The Broader Implications

The $117 million annual revenue figure highlights how YouTube’s monetization system inadvertently rewards this behavior. Creators can generate hundreds of videos with minimal effort using AI tools, then profit from ad revenue as the algorithm distributes their content to unsuspecting viewers. For new users trying to orient themselves on the platform, this creates a degraded first impression that could affect long-term engagement and trust.

The problem also raises questions about information quality in an AI-saturated media environment. When platforms prioritize engagement metrics over accuracy, users become vulnerable to misinformation presented with the polish and authority of legitimate content.

As AI generation tools become more sophisticated and accessible, the challenge will likely intensify. Without significant changes to content moderation and recommendation algorithms, YouTube risks becoming a platform where synthetic spam drowns out authentic human creativity and expertise.